Bot traffic has quietly become one of the biggest challenges for website owners, marketers, and businesses operating online. Whether you run a small blog, an eCommerce store, a SaaS platform, or a large enterprise website, bots are almost certainly visiting your site every day. Some of them help your website function better and appear in search results. Others silently drain your resources, distort your data, steal content, or even attempt to break into user accounts.

Understanding bot traffic is no longer optional. Without a clear strategy to identify and control it, businesses risk losing money, compromising security, and making poor decisions based on polluted analytics.

This guide walks you through everything you need to know about bot traffic:

- what it is,

- where it comes from,

- how it affects your website,

- and most importantly, how to stop bad bots without harming legitimate users or search engine visibility.

1. Why Bot Traffic Is a Growing Problem

The modern internet runs on automation. From search engines indexing billions of pages to monitoring tools checking website uptime every minute, bots are everywhere. However, as businesses have moved more revenue, data, and user activity online, malicious bot operators have followed.

Bot traffic has increased dramatically due to:

- Cheap cloud computing

- Easy access to automation tools

- Large networks of compromised devices (botnets)

- Growth in online advertising, APIs, and digital transactions

For many websites, bots now account for a significant portion of total traffic—sometimes more than human visitors. The problem is not just volume. Bad bot traffic can inflate analytics, slow down websites, drain ad budgets, scrape proprietary data, and expose security vulnerabilities.

This article provides a complete, practical understanding of bot traffic, from fundamentals to advanced mitigation strategies, so you can protect your website without harming performance or user experience.

2. What Is Bot Traffic?

Bot traffic refers to visits to a website generated by automated software rather than real human users. These automated programs, known as bots, are designed to perform specific tasks such as crawling pages, submitting forms, clicking links, or requesting data at scale.

Unlike humans, bots:

- Operate continuously without breaks

- Can make thousands of requests per second

- Follow scripts rather than natural browsing behavior

- Often disguise themselves as legitimate users

Bots access websites through browsers, headless browsers, scripts, or APIs. Some identify themselves clearly, while others intentionally hide behind fake user agents, rotating IP addresses, or residential proxies to avoid detection.

It is important to understand that bot traffic itself is not inherently bad. The internet depends on certain bots to function properly. The real issue lies in distinguishing helpful automation from malicious or abusive activity.

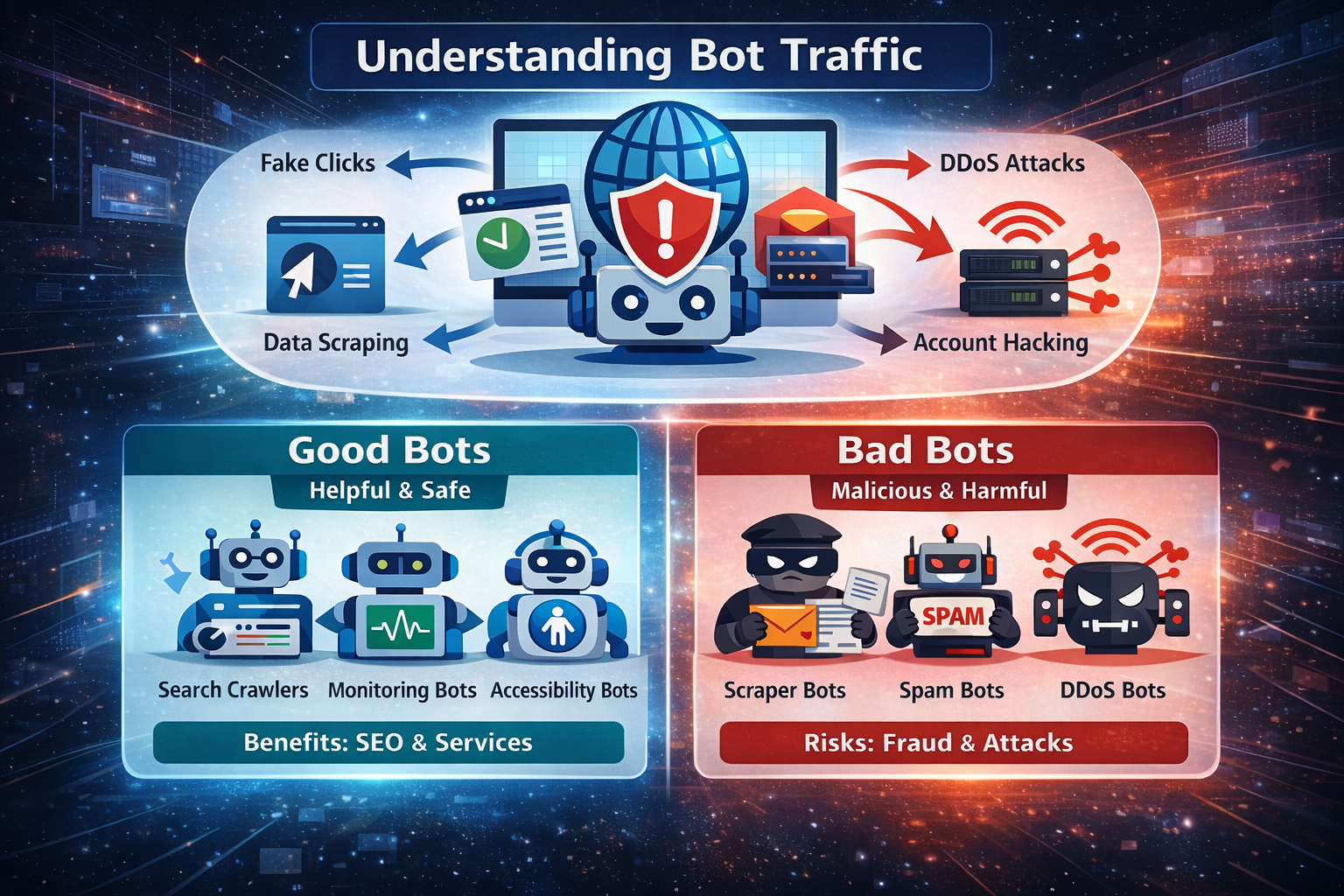

3. Types of Bot Traffic: Good Bots vs Bad Bots

Not all bots should be blocked. In fact, blocking the wrong bots can severely damage your website’s visibility and functionality. The key is knowing the difference between good bots and bad bots.

3.1 Good Bots

Good bots perform useful and often essential functions. They follow rules, respect website policies, and generally do not attempt to cause harm.

Common examples of good bots include:

- Search engine crawlers that index your pages and help them appear in search results

- Monitoring bots that check website uptime, performance, and errors

- Accessibility bots that assist users with disabilities

- Analytics and testing bots used by developers and QA teams

Good bots typically:

- Identify themselves honestly

- Respect robots.txt directives

- Crawl at reasonable speeds

- Do not target sensitive areas like login or checkout pages

Blocking good bots can lead to poor search engine visibility, broken integrations, and incomplete performance monitoring.
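To make "respect robots.txt" concrete, here is a minimal sketch of how a well-behaved crawler might check a site's rules before requesting a page. It uses Python's standard `urllib.robotparser` module. The robots.txt contents, the `ExampleBot/1.0` user agent, and the helper names are hypothetical, chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for an example shop: keep crawlers out of
# checkout and account pages, and ask them to wait 5 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Crawl-delay: 5
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def polite_fetch_allowed(parser: RobotFileParser, user_agent: str, url: str) -> bool:
    """A good bot asks this before every request and skips disallowed URLs."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    agent = "ExampleBot/1.0"  # hypothetical crawler name
    for url in ("https://example.com/blog/post", "https://example.com/checkout/cart"):
        print(url, "allowed" if polite_fetch_allowed(rp, agent, url) else "disallowed")
    print("requested crawl delay (seconds):", rp.crawl_delay(agent))
```

Note that robots.txt is purely advisory: good bots follow it, while bad bots simply ignore it, which is why it cannot serve as a blocking mechanism on its own.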

3.2 Bad Bots

Bad bots are designed to exploit, abuse, or manipulate websites for financial gain, data theft, or disruption.

Common types of bad bots include:

- Scraping bots that steal content, pricing, or product data

- Credential-stuffing bots that attempt account takeovers using leaked passwords

- Spam bots that flood forms, comments, and registrations

- Click fraud bots that waste advertising budgets

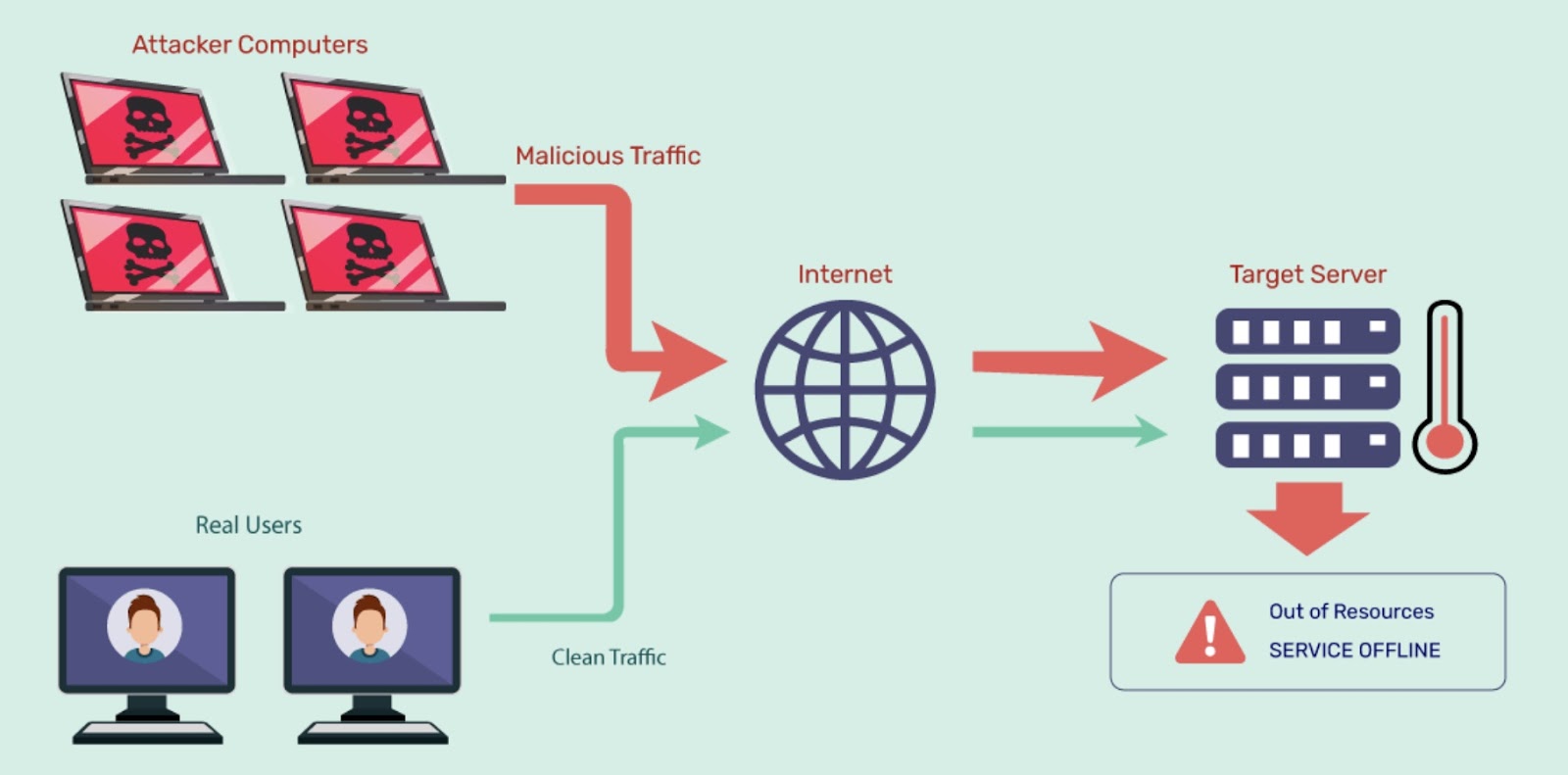

- DDoS bots that overwhelm servers with traffic

- Brute-force bots that attack login systems

These bots often mimic real users by rotating IPs, spoofing browsers, and behaving just human-like enough to bypass basic defenses.

4. Common Sources of Bot Traffic

Understanding where bot traffic comes from helps explain why it is so difficult to stop.

Many bad bots originate from:

- Botnets, which are networks of infected devices controlled remotely

- Cloud hosting providers, where attackers can spin up servers quickly

- Residential IP networks, which make bots look like ordinary home users

- Free proxy and VPN services, used to hide real locations

Geographically, bot traffic can appear to come from anywhere in the world. Modern bot attacks often use globally distributed IP addresses, making simple country-based blocking ineffective and risky.

5. How Bot Traffic Impacts Your Website and Business

Bot traffic does more than inflate visitor numbers. Its real impact is often hidden and cumulative.

5.1 Performance and Hosting Costs

Bad bots can:

- Consume server resources

- Increase bandwidth usage

- Slow down page load times

- Cause timeouts or crashes during traffic spikes

This leads to higher hosting bills and poor user experience for real visitors.

5.2 SEO and Analytics Damage

Bot traffic can seriously distort your data:

- Artificially high traffic numbers

- Extremely high bounce rates

- Zero session duration

- Skewed conversion tracking

When analytics are polluted, businesses make wrong decisions about content, marketing, and UX.

5.3 Advertising and Revenue Loss

For sites running paid ads, bots can:

- Generate fake impressions

- Click ads without intent to convert

- Drain daily budgets

- Reduce ROI and performance metrics

Click fraud is one of the most expensive consequences of unchecked bot traffic.

5.4 Security Risks

Some of the most dangerous bots target security weaknesses:

- Account takeovers

- Data breaches

- API abuse

- Website defacement

Even a small vulnerability can be exploited at scale when automated bots are involved.

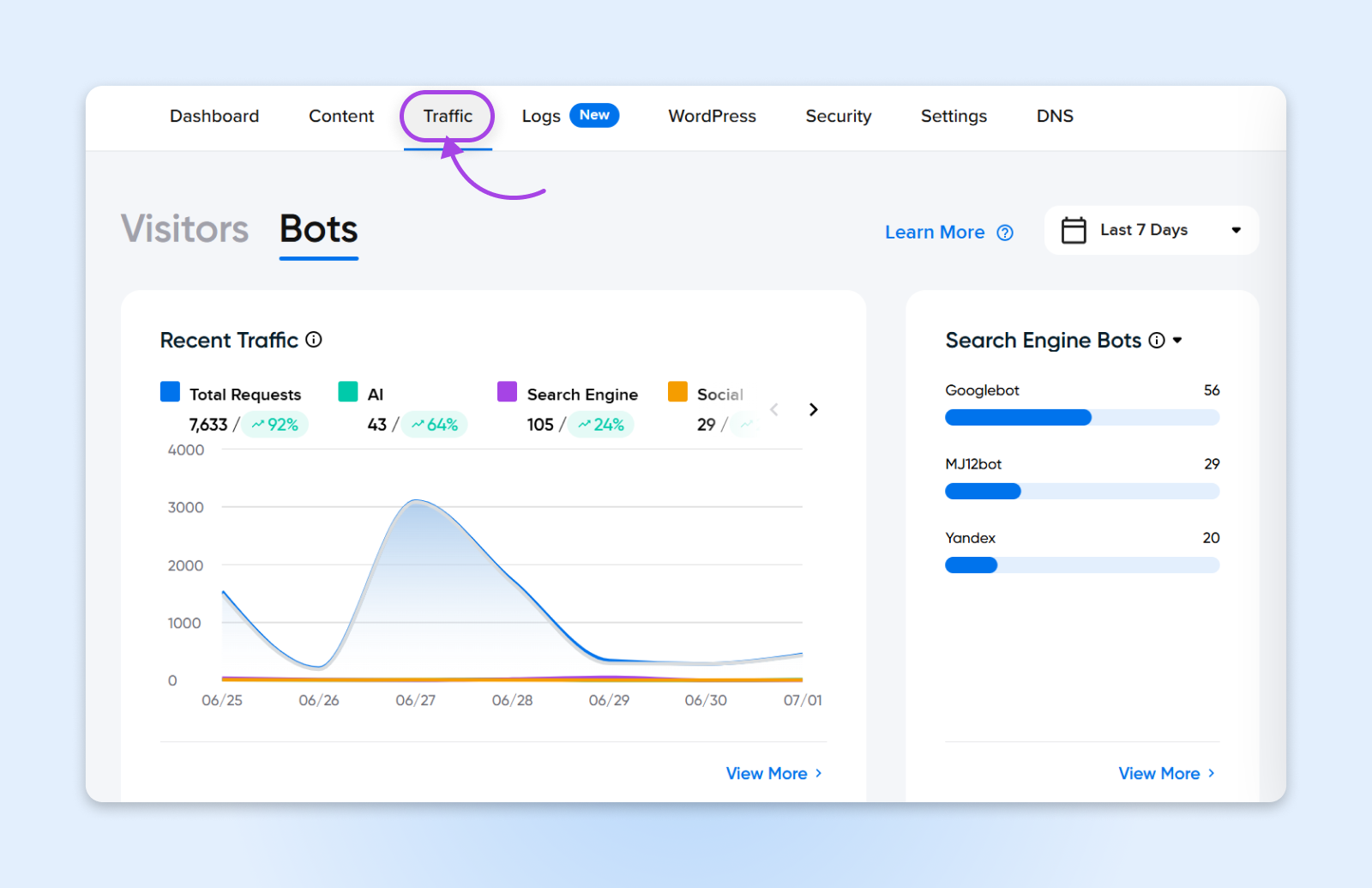

6. How to Identify Bot Traffic on Your Website

Detecting bot traffic is the first step toward controlling it.

Common warning signs include:

- Sudden traffic spikes without corresponding sales or leads

- Sessions with zero engagement and instant exits

- High traffic to login, checkout, or API endpoints

- Repeated requests from the same IP ranges

- Strange user agents or outdated browsers

- Unusual traffic at odd hours with no geographic logic

Tools used to identify bot traffic include:

- Web analytics platforms

- Server access logs

- Security dashboards

- Behavior flow and session recordings

The key is pattern recognition—bots behave consistently in ways humans do not.
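As an illustration of that pattern recognition, the sketch below scans server access logs for three of the warning signs listed above: unusually high request volume per IP, heavy use of login, checkout, or API endpoints, and missing or script-like user agents. It assumes the common "combined" log format used by Apache and Nginx. The thresholds, path prefixes, and user-agent markers are hypothetical starting points, not recommended values, and a flag here is a reason to investigate, not proof of a bot (some legitimate monitoring tools also use `curl`, for example).

```python
import re
from collections import Counter

# Matches one line of the "combined" log format:
# IP, identity, user, [time], "request line", status, bytes, "referrer", "user agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

# Hypothetical thresholds and markers: tune them against your own traffic.
MAX_REQUESTS_PER_IP = 100
SENSITIVE_HIT_THRESHOLD = 20
SUSPICIOUS_AGENT_MARKERS = ("curl", "wget", "python-requests", "headless")
SENSITIVE_PREFIXES = ("/login", "/checkout", "/api/")


def flag_suspicious_ips(lines):
    """Return {ip: [reasons]} for clients whose log entries look automated."""
    total = Counter()
    sensitive = Counter()
    reasons = {}
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip malformed or differently formatted lines
        ip, path = match["ip"], match["path"]
        agent = match["agent"].lower()
        total[ip] += 1
        if path.startswith(SENSITIVE_PREFIXES):
            sensitive[ip] += 1
        # An empty or "-" user agent, or a known scripting tool, is a warning sign.
        if agent in ("", "-") or any(m in agent for m in SUSPICIOUS_AGENT_MARKERS):
            reasons.setdefault(ip, set()).add("suspicious user agent")
    for ip, count in total.items():
        if count > MAX_REQUESTS_PER_IP:
            reasons.setdefault(ip, set()).add(f"{count} requests")
        if sensitive[ip] >= SENSITIVE_HIT_THRESHOLD:
            reasons.setdefault(ip, set()).add("heavy use of login/checkout/API endpoints")
    return {ip: sorted(r) for ip, r in reasons.items()}
```

Because the function accepts any iterable of lines, you can pass it an open log file directly, for example `flag_suspicious_ips(open("access.log"))`, where the file name is a placeholder for your own log path.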

7. Common Myths About Bot Traffic

There are several misconceptions that prevent businesses from addressing bot traffic properly.

One common myth is that all bots are bad. In reality, many bots are essential to your site’s success.

Another myth is that CAPTCHAs stop all bots. While CAPTCHAs help against simple automation, advanced bots can often bypass them.

Many small businesses believe they are too small to be targeted. In practice, automated bots scan the entire internet, not just large websites.

Finally, relying solely on firewalls is not enough. Static rules alone cannot keep up with evolving bot behavior.

8. How to Stop and Prevent Bad Bot Traffic

Stopping bad bots requires a layered approach rather than a single solution.

8.1 Use a Web Application Firewall (WAF)

A WAF filters incoming traffic before it reaches your website. It can block known malicious patterns, suspicious IPs, and abnormal requests. A properly configured WAF acts as your first line of defense.

8.2 Implement Rate Limiting and Request Throttling

Rate limiting restricts how many requests a single user or IP can make in a given time. This is highly effective against brute-force attacks, scraping, and API abuse.
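One common way to implement rate limiting is a token bucket: each client gets a bucket that holds up to a fixed number of tokens, every request spends one token, and tokens refill at a steady rate. This allows short bursts from real users while capping sustained automated traffic. The sketch below is an in-memory, single-process version; the class name and parameters are hypothetical, and the clock is injectable so the behavior can be tested without waiting.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-key token bucket: each key (e.g. a client IP) may burst up to
    `capacity` requests, then is held to `refill_rate` requests per second."""

    def __init__(self, capacity: float, refill_rate: float,
                 clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock
        # key -> (tokens remaining, timestamp of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, key: str) -> bool:
        now = self.clock()
        # New clients start with a full bucket.
        tokens, last = self._buckets.get(key, (self.capacity, now))
        # Refill in proportion to elapsed time, never above capacity.
        tokens = min(self.capacity, tokens + (now - last) * self.refill_rate)
        if tokens >= 1:
            self._buckets[key] = (tokens - 1, now)
            return True
        self._buckets[key] = (tokens, now)
        return False
```

In a web handler you would call `limiter.allow(client_ip)` and respond with HTTP 429 (Too Many Requests) when it returns `False`. If your site runs on several servers, the bucket state would need to live in a shared store rather than in each process's memory, and idle buckets should be evicted so the dictionary does not grow without bound.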

8.3 Use CAPTCHAs and Challenge-Response Tests

CAPTCHAs help verify that a visitor is human. They are best used selectively on:

- Login pages

- Forms

- Checkout flows

- Password reset pages

Overuse can hurt user experience, so balance is essential.
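One way to strike that balance is to challenge only on the sensitive flows listed above, and only when something already looks off. The sketch below expresses that policy as a single decision function. The path list, the thresholds, and the `risk_score` input (assumed to come from another layer, such as a rate limiter or behavioral check) are all hypothetical.

```python
from typing import Tuple

# Hypothetical sensitive paths: adjust to your own site's URLs.
CHALLENGE_PATHS: Tuple[str, ...] = (
    "/login", "/signup", "/checkout", "/password-reset", "/contact",
)


def should_challenge(path: str, recent_failures: int, risk_score: float,
                     failure_threshold: int = 3, risk_threshold: float = 0.7) -> bool:
    """Decide whether to show a CAPTCHA for this request.

    Ordinary browsing is never challenged. On sensitive flows, a visitor is
    challenged only after repeated failures (e.g. wrong passwords) or when
    another signal has already marked the request as risky.
    """
    if not path.startswith(CHALLENGE_PATHS):
        return False  # never interrupt normal page views
    return recent_failures >= failure_threshold or risk_score >= risk_threshold
```

Most real visitors on a login page never hit either threshold, so they never see a CAPTCHA, while a script cycling through passwords triggers it after a few attempts.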

8.4 Block Suspicious IPs and User Agents

Blocking known malicious IP ranges and fake user agents can reduce noise. However, this must be updated regularly, as attackers rotate infrastructure frequently.
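A basic blocklist check can match client addresses against network ranges (CIDR blocks) rather than individual IPs, plus a few user-agent substrings. The sketch below uses Python's standard `ipaddress` module. The listed ranges come from the documentation-only address blocks reserved in RFC 5737, and the user-agent entries are examples of tools that often announce themselves by name. In practice both lists would be loaded from a source you keep up to date, and user-agent matching only catches bots that do not bother to spoof their identity.

```python
import ipaddress

# Hypothetical blocklist using documentation-only ranges (RFC 5737).
BLOCKED_NETWORKS = [
    ipaddress.ip_network(cidr) for cidr in ("198.51.100.0/24", "203.0.113.128/25")
]
# Example scanner names; trivial for a determined attacker to change.
BLOCKED_AGENT_SUBSTRINGS = ("sqlmap", "masscan", "nikto")


def is_blocked(client_ip: str, user_agent: str) -> bool:
    """Return True if the IP falls in a blocked range or the UA matches a known tool."""
    addr = ipaddress.ip_address(client_ip)
    # Membership tests between IPv4 and IPv6 simply return False.
    if any(addr in net for net in BLOCKED_NETWORKS):
        return True
    agent = user_agent.lower()
    return any(s in agent for s in BLOCKED_AGENT_SUBSTRINGS)
```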

8.5 Analyze Behavior, Not Just Identity

Modern bot protection focuses on behavior:

- Mouse movement patterns

- Session depth

- Time between actions

- Interaction consistency

Behavioral analysis is much harder for bots to fake at scale.
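As a small example of the "time between actions" signal, the sketch below looks at the gaps between a session's events. Humans act at irregular intervals, while simple scripts tend to fire either far faster than a person could or at a near-constant rhythm. The thresholds are hypothetical, timestamps are assumed to be in seconds and sorted in ascending order, and this covers only timing; signals such as mouse movement require client-side data collection that is not shown here.

```python
from statistics import mean, pstdev
from typing import Sequence


def timing_looks_automated(timestamps: Sequence[float],
                           min_events: int = 5,
                           min_human_gap: float = 0.2,
                           min_variation: float = 0.1) -> bool:
    """Heuristic check on the intervals between a session's actions."""
    if len(timestamps) < min_events:
        return False  # not enough evidence either way
    gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
    avg = mean(gaps)
    if avg < min_human_gap:
        return True  # faster, on average, than a person can click or type
    # Coefficient of variation: near zero means a metronome-like rhythm.
    variation = pstdev(gaps) / avg
    return variation < min_variation
```

A result like this works best as one input to an overall score rather than a blocking decision on its own, since advanced bots deliberately add random delays.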

9. Advanced Bot Mitigation Strategies

For high-risk or high-traffic websites, advanced defenses may be required.

These include:

- Machine-learning-based bot detection

- Device and browser fingerprinting

- JavaScript challenges that test execution capabilities

- Honeypots that trap automated scripts

- API-specific authentication and token validation

Advanced strategies focus on adaptability rather than static rules.
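A honeypot can be as simple as an extra form field that is hidden from humans with CSS. Real visitors never see it, so it arrives empty, while naive form-filling scripts tend to populate every input they find. The field name below is hypothetical, and the check runs on the server after the form is submitted.

```python
from typing import Mapping

# Hypothetical hidden field, rendered in the form as something like:
#   <input name="website" style="display:none" autocomplete="off">
# autocomplete="off" reduces the chance that browser autofill fills it for a real user.
HONEYPOT_FIELD = "website"


def is_honeypot_triggered(form_data: Mapping[str, str]) -> bool:
    """Return True if the hidden field contains anything, which a human could not have typed."""
    return bool(form_data.get(HONEYPOT_FIELD, "").strip())
```

When the check fires, many sites quietly discard the submission instead of showing an error, so the bot operator gets no feedback about what gave it away.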

10. Bot Traffic and SEO: What You Need to Know

Bot management must be handled carefully to avoid harming SEO.

Search engine crawlers need access to your content to index it properly.

Blocking them can:

- Reduce crawl frequency

- Prevent new pages from being indexed

- Harm rankings

Best practices include:

- Allowing verified search engine bots

- Using robots.txt correctly

- Monitoring crawl activity

- Avoiding aggressive blocking rules

The goal is precision—blocking bad bots without interfering with legitimate crawlers.
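Because any bot can claim to be Googlebot in its user agent, "allowing verified search engine bots" means checking the claim. Google and Bing both document a two-step DNS check: look up the hostname for the requesting IP (reverse DNS), confirm it belongs to the search engine's domain, then look up that hostname (forward DNS) and confirm it points back to the same IP. The sketch below implements that check with Python's standard `socket` module. The domain suffixes reflect the engines' published guidance as we understand it, so confirm them against current documentation; `gethostbyname_ex` handles IPv4 only, and the DNS functions are injectable so the logic can be tested offline.

```python
import socket
from typing import Callable, List

# Hostname suffixes documented for Googlebot and Bingbot
# (verify against each search engine's current documentation).
VERIFIED_CRAWLER_SUFFIXES = (".googlebot.com", ".google.com", ".search.msn.com")


def reverse_lookup(ip: str) -> str:
    return socket.gethostbyaddr(ip)[0]


def forward_lookup(hostname: str) -> List[str]:
    return socket.gethostbyname_ex(hostname)[2]  # list of IPv4 addresses


def is_verified_crawler(ip: str,
                        reverse: Callable[[str], str] = reverse_lookup,
                        forward: Callable[[str], List[str]] = forward_lookup) -> bool:
    try:
        hostname = reverse(ip).rstrip(".").lower()
    except OSError:
        return False  # no reverse DNS record: cannot verify
    if not hostname.endswith(VERIFIED_CRAWLER_SUFFIXES):
        return False  # hostname is not in a search engine's domain
    try:
        addresses = forward(hostname)
    except OSError:
        return False
    # The forward lookup must resolve back to the original IP,
    # otherwise the reverse record could simply be forged.
    return ip in addresses
```

DNS lookups are slow relative to serving a page, so in practice you would cache the result per IP and run the check only for requests whose user agent claims to be a search engine crawler.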

11. Monitoring and Maintaining Bot Protection Over Time

Bot protection is not a one-time setup. Attack patterns evolve constantly.

Ongoing maintenance should include:

- Regular review of traffic patterns

- Updating firewall and rate-limit rules

- Monitoring logs for anomalies

- Testing defenses after site updates

- Adjusting thresholds during campaigns or traffic spikes

Continuous monitoring ensures defenses stay effective without hurting real users.
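For the "monitoring logs for anomalies" step, even a simple statistical check can surface sudden surges worth investigating. The sketch below compares the latest traffic count (for example, requests in the last five minutes) with a recent baseline and flags values several standard deviations above it. The window size and threshold are hypothetical. Legitimate campaigns also produce spikes, so a flag should prompt a review, and possibly a threshold adjustment, rather than automatic blocking.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_traffic_spike(history: Sequence[int], current: int,
                     threshold: float = 3.0, min_history: int = 12) -> bool:
    """Flag `current` when it sits more than `threshold` standard
    deviations above the mean of the recent `history` counts."""
    if len(history) < min_history:
        return False  # not enough baseline data yet
    baseline = mean(history)
    # Floor the spread at 1 so a perfectly flat baseline does not
    # cause a division by zero or flag a single extra request.
    spread = max(pstdev(history), 1.0)
    return (current - baseline) / spread > threshold
```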

12. Future of Bot Traffic: What to Expect

Bot traffic is becoming more sophisticated. AI-powered bots can already mimic human behavior with increasing accuracy.

Future trends include:

- More advanced scraping and fraud bots

- Increased targeting of APIs and mobile apps

- Smarter evasion of traditional defenses

- Greater reliance on behavioral and AI-based detection

Websites that rely only on static rules will struggle. Adaptive, intelligent security will become the standard.

Conclusion: Turning Bot Traffic From a Threat Into a Managed Risk

Bot traffic is an unavoidable reality of the modern internet. While some bots are essential and beneficial, others pose serious risks to performance, security, revenue, and data accuracy.

The key is not eliminating bot traffic entirely, but managing it intelligently. By understanding how bots operate, identifying harmful patterns, and implementing layered defenses, businesses can protect their websites without sacrificing user experience or search visibility.

With the right strategy in place, bot traffic becomes a controlled variable rather than a constant threat—allowing you to focus on growth, performance, and real human users.